1. Policies and regulations for driverless cars are not yet complete

At present, the biggest obstacle to the wider adoption of driverless vehicles is the public's low acceptance of, and trust in, their safety and reliability. In addition, because national policies and regulations on driverless cars are still incomplete, driverless cars cannot yet operate on open public roads.

To address these two problems, the National Innovation Center took the lead in bringing together industry resources to jointly develop an L4 driverless car, which is currently operating stably in Shougang Park.

Overall system scheme

2. Design and implementation of the unmanned driving system

The unmanned driving system uses a variety of on-board sensors to acquire the environmental information relevant to the driving task, including the state of the vehicle itself, surrounding obstacles and the road. This information is passed to the decision-making and planning module. That module plans a suitable path from the perception and positioning results, the vehicle state and the user's requirements. The vehicle's driving state is then controlled so that it follows this path.
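The perception, planning and control flow described above can be sketched as a single processing step. This is a toy illustration, not the project's software. The obstacle representation, the 1.5 m lane half-width, the safe gap and the proportional gain are all assumptions chosen for readability.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Obstacle:
    x: float      # metres ahead of the vehicle (assumed vehicle frame)
    y: float      # metres to the left of the vehicle
    speed: float  # m/s along the vehicle's heading


@dataclass
class WorldState:
    ego_speed: float
    obstacles: List[Obstacle] = field(default_factory=list)


def perceive(raw_detections) -> List[Obstacle]:
    """Perception: turn raw (x, y, speed) tuples into Obstacle objects."""
    return [Obstacle(*d) for d in raw_detections]


def plan(state: WorldState, cruise_speed: float, safe_gap: float) -> float:
    """Planning: cruise, or slow to the lead obstacle's speed if it is too close."""
    in_lane = [o for o in state.obstacles if o.x > 0 and abs(o.y) < 1.5]
    if not in_lane:
        return cruise_speed
    nearest = min(in_lane, key=lambda o: o.x)
    if nearest.x < safe_gap:
        return min(cruise_speed, max(0.0, nearest.speed))
    return cruise_speed


def control(ego_speed: float, target_speed: float, gain: float = 0.5) -> float:
    """Control: proportional throttle (+) / brake (-) command clipped to [-1, 1]."""
    return max(-1.0, min(1.0, gain * (target_speed - ego_speed)))


def step(raw_detections, ego_speed, cruise_speed=5.0, safe_gap=10.0):
    """One pass through the pipeline; returns (target_speed, command)."""
    state = WorldState(ego_speed, perceive(raw_detections))
    target = plan(state, cruise_speed, safe_gap)
    return target, control(ego_speed, target)
```

For example, `step([(6.0, 0.2, 1.0)], 4.0)` sees a slow obstacle 6 m ahead in the lane. It lowers the target speed to 1 m/s and issues full braking.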

Different autonomy levels and operating environments call for different implementation schemes. Shougang Park has tall buildings, dense tree cover and complex road conditions. To suit these conditions, this project adopts a sensor solution consisting of 3 lidars, 1 millimeter-wave radar, 2 cameras, 12 ultrasonic radars and 1 integrated navigation unit.

Sensor installation locations

2.1 Sensors

Of the 3 lidars, one 32-line lidar is mounted on the roof and two 16-line lidars are mounted on either side of the roof. They detect the environment and obstacles around the vehicle and provide the size and orientation of each obstacle. Lidar offers high ranging accuracy, precise azimuth measurement, a wide measurement range and strong resistance to interference.

The millimeter-wave radar is a 77 GHz mid- to long-range radar mounted inside the front bumper. It detects moving targets ahead of the vehicle and measures their speed and bearing. It measures speed and distance well and is relatively insensitive to external conditions, so it can work around the clock.

The main camera is mounted on the roof. The front vision camera is attached to the inside of the windshield at its center. Together they detect obstacles, the road, traffic signs and traffic lights ahead of the vehicle, providing obstacle types and road environment information. Their main advantage is accurate obstacle classification.

Twelve ultrasonic radars are arranged around the vehicle: 4 at the front, 4 at the rear, 2 on the left and 2 on the right. They detect nearby obstacles so that the driverless vehicle can drive into and out of its garage autonomously.

Two GPS antennas are mounted on the roof and an inertial navigation unit is installed in the trunk. Together they provide the vehicle's pose and position.
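Writing the sensor layout down as data makes it easy to check against the specification. The sketch below encodes the suite exactly as listed in this section. The `Sensor` record, its field names and the short position labels are hypothetical; this is not the project's actual configuration format.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Sensor:
    kind: str   # sensor type
    model: str  # short description taken from the text
    mount: str  # mounting position taken from the text


def build_sensor_suite():
    suite = [
        Sensor("lidar", "32-line", "roof"),
        Sensor("lidar", "16-line", "roof, left side"),
        Sensor("lidar", "16-line", "roof, right side"),
        Sensor("mmwave_radar", "77 GHz mid/long range", "inside front bumper"),
        Sensor("camera", "main camera", "roof"),
        Sensor("camera", "front vision camera", "windshield, inner center"),
        Sensor("integrated_nav", "2 GPS antennas + inertial unit",
               "antennas on roof, inertial unit in trunk"),
    ]
    # Ultrasonic layout from the text: 4 front, 4 rear, 2 left, 2 right.
    for side, count in (("front", 4), ("rear", 4), ("left", 2), ("right", 2)):
        suite += [Sensor("ultrasonic", "short range", f"{side} #{i + 1}")
                  for i in range(count)]
    return suite


def count_by_kind(suite):
    """Count sensors per type, e.g. to check the five sensor types are present."""
    return Counter(s.kind for s in suite)
```

Counting by kind reproduces the totals stated above: 3 lidars, 1 millimeter-wave radar, 2 cameras, 12 ultrasonic radars and 1 integrated navigation unit.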

2.2 Software architecture of the unmanned driving system

This project uses a pure electric vehicle as the platform, equipped with the five types of sensors above to perceive the road environment accurately. Their outputs are combined through multi-sensor fusion, which reduces the probability of misjudgment and improves the stability and accuracy of the information output.
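The article does not specify its fusion algorithm. The sketch below is a generic illustration of why fusion improves accuracy, not the project's method. It uses inverse-variance weighting to combine independent, unbiased measurements of the same quantity, which is the scalar, static special case of a Kalman filter update. The distances and variances in the example are made-up values.

```python
from typing import Iterable, Tuple


def fuse(measurements: Iterable[Tuple[float, float]]) -> Tuple[float, float]:
    """Inverse-variance weighted fusion.

    Each measurement is (value, variance). Returns (fused_value, fused_variance).
    Assumes independent, unbiased errors.
    """
    weight_sum = 0.0
    weighted_values = 0.0
    for value, variance in measurements:
        if variance <= 0:
            raise ValueError("variance must be positive")
        w = 1.0 / variance  # more precise sensors get more weight
        weight_sum += w
        weighted_values += w * value
    if weight_sum == 0.0:
        raise ValueError("no measurements")
    return weighted_values / weight_sum, 1.0 / weight_sum


# Hypothetical distances (m) to one obstacle, with illustrative variances (m^2).
lidar = (20.0, 0.01)
radar = (20.5, 0.04)
camera = (19.0, 1.0)
print(fuse([lidar, radar, camera]))
```

The fused variance is always smaller than the smallest individual variance. This is one concrete way in which combining sensors "improves the stability and accuracy of information output".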

We designed and developed the multi-sensor fusion algorithm, the integrated positioning algorithm, the decision-planning algorithm and the vehicle control algorithm. Together these realize autonomous car following, autonomous overtaking and merging, lane keeping, autonomous passage through traffic intersections, and obstacle avoidance on both open and closed park roads. We also wrote test cases and formulated test specifications for driverless operation, and completed testing of the unmanned driving system.

To achieve these goals, the software architecture of the unmanned driving system is divided into five layers: the sensor interface layer, perception layer, positioning layer, decision-making layer and vehicle control layer.

Sensor interface layer: receives the inputs of the various peripheral sensors.

Perception layer: collects data from the sensors and combines and optimizes their complementary information across multiple levels and spatial scales, ultimately achieving full perception of the surrounding environment.

Multi-sensor fusion scheme

Positioning layer: builds a global map from the lidar and integrated navigation data. The detection results of the lidar, cameras, millimeter-wave radar and ultrasonic radars are fused to build a local map centered on the moving vehicle. GPS-derived vehicle position and attitude information is superimposed on this map. The result is a real-time comprehensive map that gives an intuitive view of the processing results for the driving environment.
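A vehicle-centered local map requires every global-frame detection to be expressed relative to the current vehicle pose. The sketch below performs that 2D rigid transform and marks the points in a small occupancy grid. The grid size, the resolution and the function names are assumptions. A real system would also handle 3D data, timestamps and sensor extrinsics.

```python
import math
from typing import Iterable, List, Tuple


def global_to_vehicle(px: float, py: float, ego_x: float, ego_y: float,
                      ego_yaw: float) -> Tuple[float, float]:
    """Express global point (px, py) in the vehicle frame (x forward, y left)."""
    dx, dy = px - ego_x, py - ego_y
    c, s = math.cos(ego_yaw), math.sin(ego_yaw)
    return c * dx + s * dy, -s * dx + c * dy


def local_occupancy_grid(points: Iterable[Tuple[float, float]],
                         pose: Tuple[float, float, float],
                         size_m: float = 40.0,
                         resolution_m: float = 0.5) -> List[List[int]]:
    """Square grid centered on the vehicle; 1 marks an occupied cell.

    Rows index the forward axis, columns the lateral axis. Points outside
    the grid are ignored.
    """
    n = int(size_m / resolution_m)
    grid = [[0] * n for _ in range(n)]
    half = size_m / 2.0
    for px, py in points:
        lx, ly = global_to_vehicle(px, py, *pose)
        if -half <= lx < half and -half <= ly < half:
            row = int((lx + half) / resolution_m)
            col = int((ly + half) / resolution_m)
            grid[row][col] = 1
    return grid
```

For example, a vehicle at (10, 0) facing the global +y direction sees the global point (10, 5) as 5 m straight ahead.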

Decision-making layer: in the global environment, it generates a global path from the road network, task and positioning information. In the local environment, it uses perception information to infer reasonable driving behavior in real time under the constraints of traffic rules, and generates a safe drivable area.

It generates smooth candidate driving routes according to vehicle speed and road complexity. It then analyzes static and dynamic obstacles and traffic regulations to produce a local path plan, and sends the resulting driving-strategy decisions to the vehicle control layer. It also handles and recovers from system faults and accepts higher-level control commands.
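The article does not name its global planner. A common choice for generating a global path over a road network is a shortest-path search. The sketch below runs Dijkstra's algorithm on a hypothetical park graph whose node names and segment lengths are invented for illustration.

```python
import heapq
from typing import Dict, List, Tuple

Graph = Dict[str, List[Tuple[str, float]]]  # node -> [(neighbour, length_m)]


def shortest_path(graph: Graph, start: str, goal: str) -> Tuple[float, List[str]]:
    """Dijkstra's algorithm; returns (total_length, node_sequence)."""
    dist = {start: 0.0}
    prev: Dict[str, str] = {}
    heap = [(0.0, start)]
    visited = set()
    while heap:
        d, node = heapq.heappop(heap)
        if node in visited:
            continue  # stale queue entry
        visited.add(node)
        if node == goal:
            break
        for nxt, length in graph.get(node, []):
            nd = d + length
            if nd < dist.get(nxt, float("inf")):
                dist[nxt] = nd
                prev[nxt] = node
                heapq.heappush(heap, (nd, nxt))
    if goal not in dist:
        return float("inf"), []  # goal unreachable
    path = [goal]
    while path[-1] != start:
        path.append(prev[path[-1]])
    return dist[goal], path[::-1]


# Hypothetical one-way road network: junctions and segment lengths in metres.
park = {
    "gate": [("A", 120.0), ("B", 300.0)],
    "A": [("B", 100.0), ("C", 400.0)],
    "B": [("C", 150.0)],
    "C": [],
}
```

Here the route from `gate` to `C` goes via `A` and `B` (370 m), not via the direct `A`-`C` segment (520 m).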

Vehicle control layer: based on the path-planning results and the vehicle's internal sensor information, it generates gear, throttle and steering commands. These commands keep the vehicle running smoothly along the planned path and realize autonomous driving.
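Longitudinal control, which turns a planned speed into throttle and brake commands, is often done with a PID loop. The article does not say which controller it uses. The sketch below is a discrete PID controller with output clamping and simple anti-windup. It drives a crude first-order vehicle model. The gains, the time step and the vehicle model are all assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass
class PID:
    kp: float
    ki: float
    kd: float
    dt: float
    integral: float = 0.0
    prev_error: float = 0.0

    def update(self, target: float, measured: float) -> float:
        """Return a command in [-1, 1]: positive = throttle, negative = brake."""
        error = target - measured
        candidate_integral = self.integral + error * self.dt
        derivative = (error - self.prev_error) / self.dt
        self.prev_error = error
        u = self.kp * error + self.ki * candidate_integral + self.kd * derivative
        if -1.0 <= u <= 1.0:
            # Anti-windup: only accumulate the integral while not saturated.
            self.integral = candidate_integral
        return max(-1.0, min(1.0, u))


def simulate(target_speed: float = 5.0, steps: int = 600, dt: float = 0.05) -> float:
    """Toy model: acceleration = 3 m/s^2 * command - 0.1 * speed. Returns final speed."""
    pid = PID(kp=0.8, ki=0.2, kd=0.0, dt=dt)
    speed = 0.0
    for _ in range(steps):
        u = pid.update(target_speed, speed)
        speed += (3.0 * u - 0.1 * speed) * dt
    return speed
```

With these assumed gains, the simulated speed settles at the 5 m/s target within the 30 s run. The integral term removes the steady-state error that a purely proportional controller would leave against the drag term.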

Software system architecture diagram

3. Design and implementation of the human-vehicle-cloud integrated human-computer interaction system

The human-computer interaction system is a prerequisite for the commercial deployment of driverless cars and matters greatly to both the industry and its users [2]. At present, users regard driverless cars with a mixture of curiosity and skepticism, and are far from trusting and accepting them. In this context, the human-machine interface of a driverless car becomes all the more important.

The interface must serve as a bridge between users and the car, letting users understand the car's real-time status and giving them a sense of safety. It must also help users build trust in driverless cars, easing the transition from traditional cars to the era of driverless driving [3].

The research goal of this project's human-computer interaction subsystem is to meet users' travel needs. It aims to give them a safe and convenient ride experience through a well-designed human-computer interface.

3.1 Function overview

The driverless vehicles run on a fixed route in the park, with a series of stops along the way. Each stop sign carries a QR code for booking a ride. A passenger near any stop can scan the QR code on the stop sign to open the ride-hailing interface. The passenger then selects the origin and destination and taps OK to place the order.

The order goes directly to the cloud. The cloud dispatches one of the operating driverless vehicles according to current orders, and sends the trip route to both the passenger's mobile phone and the vehicle. The vehicle follows the received route to pick the passenger up at the origin and deliver them to the destination. The passenger can then rate the service based on the ride experience, which completes the order.
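The ordering flow above can be sketched as a small state machine plus a dispatch rule. The article does not describe how the cloud chooses a vehicle. Purely for illustration, the sketch below assumes a one-way loop route and picks the idle vehicle with the shortest forward distance to the pickup stop. The state names, class names, stop positions and loop length are all hypothetical.

```python
from dataclasses import dataclass
from enum import Enum, auto
from typing import Dict, Optional


class OrderState(Enum):
    PLACED = auto()      # passenger submitted origin and destination
    DISPATCHED = auto()  # cloud assigned a vehicle and sent the route
    PICKED_UP = auto()
    DELIVERED = auto()
    COMPLETED = auto()   # passenger has rated the trip


# Allowed transitions, following the flow described in the text.
NEXT = {
    OrderState.PLACED: OrderState.DISPATCHED,
    OrderState.DISPATCHED: OrderState.PICKED_UP,
    OrderState.PICKED_UP: OrderState.DELIVERED,
    OrderState.DELIVERED: OrderState.COMPLETED,
}


@dataclass
class Order:
    origin: str
    destination: str
    state: OrderState = OrderState.PLACED
    vehicle: Optional[str] = None
    rating: Optional[int] = None

    def advance(self) -> None:
        if self.state not in NEXT:
            raise ValueError(f"order already {self.state.name}")
        self.state = NEXT[self.state]

    def rate(self, score: int) -> None:
        if self.state is not OrderState.DELIVERED:
            raise ValueError("only delivered orders can be rated")
        self.rating = score
        self.advance()  # DELIVERED -> COMPLETED


def loop_distance(frm: float, to: float, loop_length: float) -> float:
    """Forward distance along a one-way loop route from position frm to to."""
    return (to - frm) % loop_length


def dispatch(order: Order, stops: Dict[str, float],
             idle_vehicles: Dict[str, float], loop_length: float) -> str:
    """Assign the idle vehicle that reaches the pickup stop soonest."""
    if not idle_vehicles:
        raise RuntimeError("no idle vehicle available")
    pickup = stops[order.origin]
    best = min(idle_vehicles,
               key=lambda v: loop_distance(idle_vehicles[v], pickup, loop_length))
    order.vehicle = best
    order.advance()  # PLACED -> DISPATCHED
    return best
```

Encoding the allowed transitions in one table makes out-of-order events explicit errors. For example, an attempt to rate a trip that has not been delivered raises an exception.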

3.2 System design

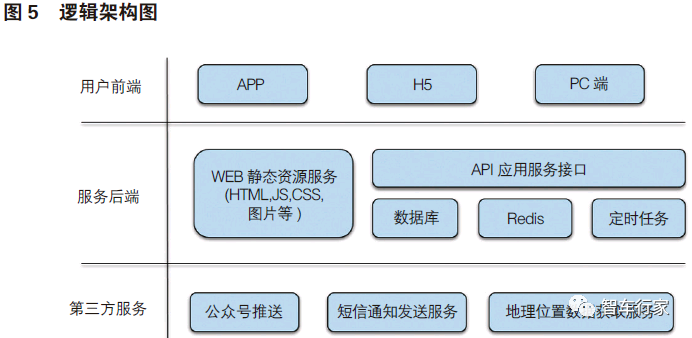

The system uses a multi-tier architecture that combines browser/server (B/S) and client/server (C/S) models, and it supports multiple network access methods. User terminals use a browser, H5 pages or an app, which reduces installation and maintenance work. The system is simple to use, requires no training, is easy to extend and supports remote business processing. Business logic runs on the server side, making full use of the server's processing power.

By combining web load balancing, component load balancing and similar techniques, the servers can be scaled horizontally. This lets the system handle more service requests and meet growing performance requirements.
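Horizontal scaling behind a load balancer can be illustrated with the simplest policy, round robin. The article does not state which balancing policy it uses, and the server names below are hypothetical. A production deployment would normally use an off-the-shelf load balancer rather than hand-written code. The sketch only shows how requests spread across interchangeable servers and how adding a server extends the rotation.

```python
from collections import Counter
from typing import Iterable, List


class RoundRobinBalancer:
    """Hands out servers in a fixed rotation."""

    def __init__(self, servers: Iterable[str]):
        self.servers: List[str] = list(servers)
        if not self.servers:
            raise ValueError("need at least one server")
        self._index = 0

    def add_server(self, server: str) -> None:
        """Horizontal scale-out: the new server joins the rotation immediately."""
        self.servers.append(server)

    def next_server(self) -> str:
        server = self.servers[self._index % len(self.servers)]
        self._index += 1
        return server


balancer = RoundRobinBalancer(["app-1", "app-2"])
first = Counter(balancer.next_server() for _ in range(4))
balancer.add_server("app-3")
second = Counter(balancer.next_server() for _ in range(6))
```

After `app-3` is added, the next six requests are split evenly across all three servers, two each.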

Logical architecture diagram of human-computer interaction subsystem

System interaction data

4. Design process construction

Following a user-centered design philosophy, the design makes the driverless car's information as transparent as possible. This helps users judge the car's reliability easily and thereby build trust in it. The result promotes public acceptance of driverless cars and helps bring them into people's daily lives.

Design Flow

Conclusion

With the rapid development of Internet and artificial intelligence technology, fully driverless operation is within reach. Beyond the limitations of technologies such as sensors and computing platforms, the deployment of driverless technology also depends on public recognition of and trust in it.

With the aim of promoting the real-world deployment of driverless driving, this paper adopts a user-centered design philosophy. Drawing on the application scenarios of the "Technology Winter Olympics", it designs and implements a driverless vehicle and its operating system. The system has been operating stably in Beijing's Shougang Park for 5 months and has been widely praised by the public.

In the future, as the era of intelligent connected vehicles arrives, driverless cars will serve more people, and their deployment will evolve from single-vehicle intelligence to vehicle-road cooperation. Human-computer interaction will keep innovating, and deployment scenarios will become richer.
